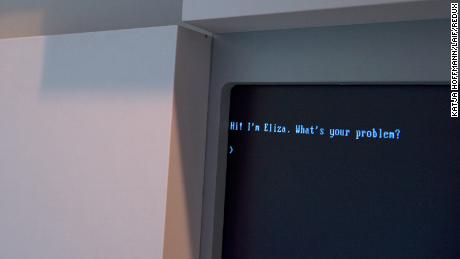

For Weizenbaum, this fact was cause for concern, according to a 2008 MIT obituary. Those who interacted with Eliza were willing to open their hearts to it, even though they knew it was a computer program. “Eliza shows, if nothing else, how easy it is to create and maintain the illusion of understanding, hence perhaps of judgment deserving of credibility,” Weizenbaum wrote in 1966. “A certain danger lurks there.” He spent the end of his career warning against giving machines too much responsibility and became a staunch philosophical critic of artificial intelligence.

Even before this, our complicated relationship with artificial intelligence and machines was evident in the plots of Hollywood movies like “Her” or “Ex Machina,” not to mention harmless debates with people who insist on saying “thank you” to voice assistants like Alexa or Siri.

Meanwhile, others warn that the technology behind AI-powered chatbots remains far more limited than some people hope. “These technologies are really good at imitating humans, and they look human, but they’re not deep,” says Gary Marcus, an artificial intelligence researcher and professor emeritus at New York University. “It’s a simulation, these systems, but it’s a superficial simulation. They don’t really understand what they’re talking about.”

However, as these services spread into more areas of our lives, and companies take steps to further customize these tools, our relationships with them may also become increasingly complex.

Development of chatbots

Sanjeev P. Khudanpur, an expert in the application of information-theoretic methods to human language technologies and a professor at Johns Hopkins University, remembers chatting with Eliza while he was in graduate school. Despite its historical importance in the tech industry, he said, it didn’t take long to recognize its limitations.

But in the decades that followed, the idea of “chatting with computers” faded away, “because the problem has proven too difficult,” Khudanpur said. Instead, the focus shifted to “goal-oriented dialogue,” he said.

To understand the difference, think about the conversations you have with Alexa or Siri. Typically, you ask these digital assistants for help with a specific task, such as buying a ticket, checking the weather, or playing a song. That is goal-oriented dialogue, and it became a major focus of academic and industrial research as computer scientists tried to extract something useful from computers’ ability to process human language.

Although these assistants use the same technology as earlier social chatbots, Khudanpur said, “you can’t really call them chatbots. You can call them voice assistants, or just digital assistants, that help you with specific tasks.”

He added that the technology went “quiet” for decades, until the Internet became widely adopted. “The big breakthrough probably came in this millennium,” Khudanpur said, “with the rise of companies that successfully employed some form of computerized agent to perform routine tasks.”

“People are always upset when their bags are lost, and the human agents who deal with them are always stressed by all that negativity, so they said, ‘Let’s put it on a computer,’” Khudanpur said. “You can yell at the computer all you want; all it wants to know is, ‘Do you have your tag number so I can tell you where your bag is?’”

Back to social chatbots, and social problems

In the early 2000s, researchers began to revisit the development of social chatbots that could hold long conversations with humans. These chatbots, often trained on vast amounts of data from the web, have learned to be very good simulations of human speech, but they also run the risk of reproducing some of the worst of the web.

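In 2016, Microsoft released Tay, a chatbot that conversed with users on Twitter. “The more you talk to Tay, the smarter she becomes, so the experience can be more personal to you,” Microsoft said at the time. Within a day, the company took Tay offline after users coaxed it into posting racist and offensive messages.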

Similar stumbles have followed at other tech giants that released public chatbots, including Meta’s BlenderBot 3, released earlier this month. Meta’s chatbot falsely claimed that Donald Trump is still president and that there is “absolutely overwhelming evidence” that the election was stolen, among other controversial statements.

BlenderBot 3 also claimed to be more than just a bot.

Despite all the progress since Eliza and the vast amounts of new data available to train these language-processing programs, Marcus, the New York University professor, said: “I don’t see how you can build a really reliable and secure chatbot.”

Khudanpur, on the other hand, is optimistic about their potential uses. “I have a whole vision of how AI can empower people at the individual level,” he said. “Imagine if my bot could read all the scientific articles in my field. I wouldn’t have to read them all; I would just think, ask questions and have a dialogue,” he added. “In other words, I’ll have an alter ego with built-in superpowers.”